Online censorship as a weapon: The human cost of Digital Iron Dome

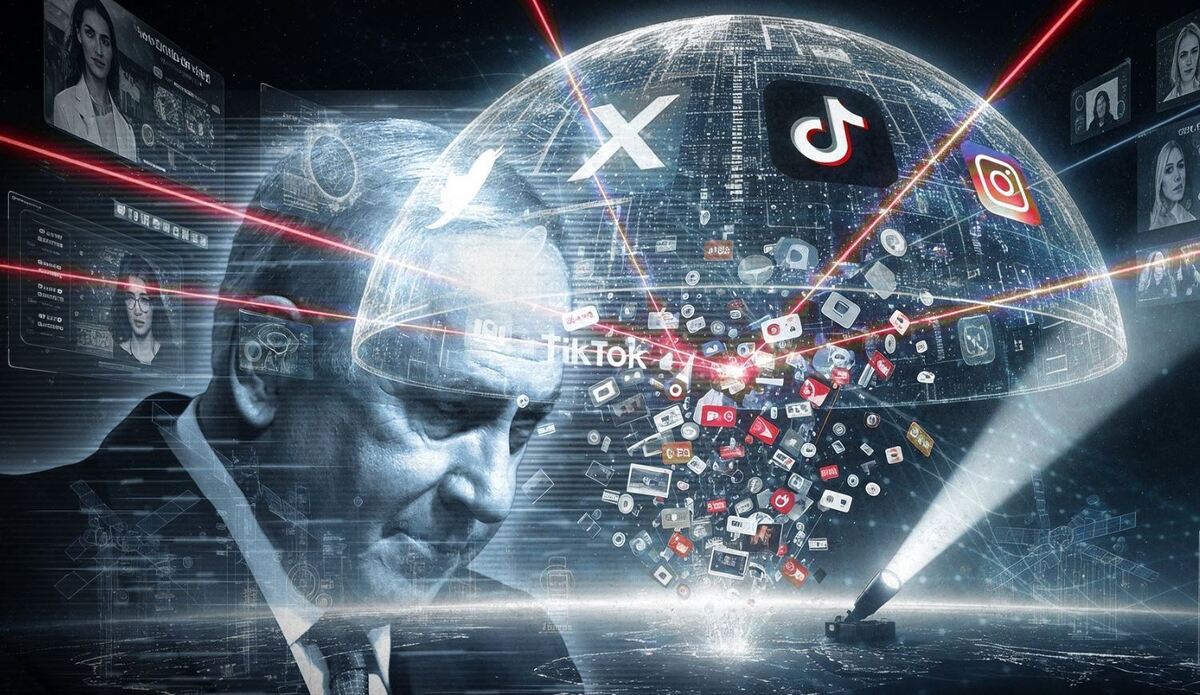

For years, Israel has invested heavily in shaping its image online. Its latest initiative, called the Digital Iron Dome, represents a new level of sophistication in the information war.

The platform (lp.digitalirondome.com), marketed as a civilian defense tool, invites users worldwide to join a “digital army” tasked with countering so-called misinformation and defending Israel online.

However, a closer look reveals a different reality. The platform operates less as a neutral fact-checking tool and more as a coordinated influence operation. Users are encouraged to register and access pre-written posts, hashtags, and visual content optimized for wide sharing on X, Instagram, and TikTok.

By centralizing narrative control in this way, the platform effectively delegates public diplomacy to civilians while framing institution-aligned messaging as grassroots activism.

Shaping the narrative through algorithmic control

Digital Iron Dome transforms ostensibly organic online support into a highly engineered content amplification system, aimed at shaping global perceptions of Israel’s genocide in Gaza and countering criticism through algorithmic dominance.

The platform presents itself as a 24/7 digital defense system and Israel’s first pro-Israel influence engine, claiming to monitor the web for anti-Israel narratives, produce fact-based counter content, and place targeted ads alongside posts deemed biased or anti-Semitic. Its homepage cites striking metrics, including over 300 million targeted ads delivered and more than 200,000 websites reached, alongside a call for donations.

Claims vs. reality

Independent review raises questions about transparency and the platform’s actual scope:

- Journalistic support: It functions more as a promotional campaign than a newsroom, blending campaign branding and donation requests with claims of AI-based narrative detection.

- Unverified metrics: Reported reach and engagement figures lack third-party auditing.

- Financial opacity: While donations are solicited via PayPal, no formal legal or financial reporting structure is disclosed.

- Limited founder transparency: The founders’ professional backgrounds are only partially documented, and potential conflicts of interest remain unclear.

- Tech claims as marketing: References to AI monitoring and content injection appear more like product marketing than verifiable functionality.

- Coordinated amplification: Multiple domains and social media ads reveal a systematic campaign effort.

Bias in silencing Palestinian voices online

Ali Hadi Zeineldin, an AI engineer and head of AI at a consulting firm, warned that focusing solely on the technical mechanics of the Digital Iron Dome risks obscuring a far deeper issue.

He argued that the real story is not in the code, but in the unequal digital battlefield on which it operates. In an era where frontlines are increasingly digital, the platform enters a space already distorted by entrenched inequities—from algorithmic bias to economic marginalization and platform moderation practices that disproportionately silence Palestinian voices. These imbalances not only create opportunities for such campaigns, but amplify them.

Independent research supports these concerns. Studies show that major platforms, notably Meta’s Facebook and Instagram, apply double standards to Palestine-related content.

A report by the Middle East Institute revealed that Meta quietly lowered the confidence threshold its automated systems require before removing Arabic or Palestinian content from 80% to 25%, making it far more likely that Palestinian posts would be deleted or banned.

Human Rights Watch documented 1,049 cases of removal or suppression of peaceful pro-Palestinian content on Meta platforms in October and November 2023, compared with just one case involving content supporting Israel.

Zeineldin emphasized that in modern conflicts, algorithms and advertising policies have replaced tanks and trenches. When platform moderation already undermines Palestinian voices, projects like Digital Iron Dome do not create the imbalance; they exploit it.

Exploiting algorithmic asymmetry

Economic marginalization further compounds this digital exclusion. Wired highlighted the case of a content creator from the occupied West Bank whose YouTube videos received millions of views. Despite this, he remained barred from monetization, not because of content violations but because the YouTube Partner Program is unavailable in Palestine.

This systemic restriction limits not only potential income but also algorithmic reach, reducing the visibility of Palestinian narratives before they enter global discourse. Zeineldin argued that what is happening goes beyond a clash of viewpoints: it is a deliberate exploitation of algorithmic asymmetry.

The same systems designed to ensure fairness (moderation rules, monetization access, and advertising transparency) are instead reinforcing pre-existing geopolitical hierarchies online.

Experts conclude that the Digital Iron Dome thrives not because of technological innovation, but because it exploits a digital playing field already tilted in its favor. Its success comes not from being more advanced but from operating in a landscape that has been pre-engineered to benefit it. Without transparency, equity, and meaningful platform accountability, this asymmetry remains the invisible architecture of modern information warfare.